Installing and configuring CloudWatch agents to send EC2 logs to CloudWatch Logs

In my personal experience, if you are using AWS EC2 instances, the best way to pipe logs reliably is to use the CloudWatch agent. Yeah, I know you can simply use rsyslog to send logs to a SIEM, but rsyslog makes it difficult to verify whether it is actually working. Imagine having tens or even hundreds of instances: how are you going to track whether the rsyslog service is alive or dead on each one?

With CloudWatch Agent, we can simply view the logs in CloudWatch Logs as a log group and log stream. Furthermore, piping logs from a CloudWatch log group to a SIEM is straightforward. Most SIEMs support CloudWatch Logs as an integration (e.g., Elastic SIEM AWS Integration) or can subscribe via Subscription Filters (Kinesis Data Stream → Graylog). Now that you understand the rationale, let’s dive in.

By default, most AMIs (non-Amazon ones) do not include CloudWatch Agent. Traditionally, ops teams either install it manually via yum/dnf on each instance or create custom AMIs with CloudWatch Agent baked in. However, if you already have a whole bunch of standalone servers running, you might want to consider batch installing it via SSM Run Command. Of course, this assumes that your instances are SSM-managed, which, at this point, they really should be.

Step 1: installing the agent on your instances

If you are unsure whether your instances have the agent, just run this step anyway. There’s no harm in installing it again — no configuration will be overridden.

Go to Systems Manager -> Run Command.

Search for the “AWS-ConfigureAWSPackage” command document and select it.

Set the action to Install and the package name to AmazonCloudWatchAgent. As for the instances, you can simply select them manually for this demo, but you can also target them by tag.

After selecting the instances, just proceed to run the command. You should see a page that tracks the success or failure of the command on each instance. Side note: I usually uncheck the S3 output under the Output section.
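If you would rather script this step than click through the console, the same Run Command can be sent with boto3. This is only a minimal sketch, assuming boto3 is installed and your credentials are allowed to call `ssm:SendCommand`; the helper names, the tag key/value and the region are hypothetical placeholders of mine.

```python
def build_install_request(tag_key, tag_value):
    """Build send_command arguments that install the CloudWatch agent package."""
    return {
        "DocumentName": "AWS-ConfigureAWSPackage",
        # Target instances by tag instead of selecting them one by one.
        "Targets": [{"Key": f"tag:{tag_key}", "Values": [tag_value]}],
        "Parameters": {
            "action": ["Install"],
            "name": ["AmazonCloudWatchAgent"],
        },
    }


def send_install(request, region):
    """Send the request through SSM Run Command and return the command ID."""
    import boto3  # third-party; imported lazily so the builder works without it

    ssm = boto3.client("ssm", region_name=region)
    response = ssm.send_command(**request)
    return response["Command"]["CommandId"]


# Example call (hypothetical tag and region, needs AWS credentials):
# command_id = send_install(build_install_request("Environment", "demo"), "us-east-1")
```

The returned command ID is what the console tracking page shows, so you can still follow the per-instance results there.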

Step 2: configuring the agent

It would not make a lot of sense to run the CloudWatch agent wizard on each instance to set it up. So instead, we will rely on SSM Run Command again. In this example, I will be piping audit logs from the instances to CloudWatch. In reality, you can pipe any log file, as long as you know its path.

First, go to Parameter Store under Systems Manager. Create a new parameter.

The config should look something like this:

    {
      "logs": {
        "logs_collected": {
          "files": {
            "collect_list": [
              {
                "file_path": "/var/log/audit/audit.log",
                "log_group_name": "audit-event-logs",
                "log_stream_name": "{instance_id}",
                "retention_in_days": 3
              }
            ]
          }
        }
      }
    }

Take note of the parameter name, which we named “cloudwatch-audit-log-config” here.
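The parameter can also be created from code, which makes it harder to paste broken JSON by accident. Below is a rough sketch, assuming boto3 and permission to call `ssm:PutParameter`; the function names and the region are my own placeholders, while the parameter name and config values are the ones used in this post.

```python
import json


def build_agent_config(file_path, log_group, retention_days):
    """Return the agent config used in this post as a Python dict."""
    return {
        "logs": {
            "logs_collected": {
                "files": {
                    "collect_list": [
                        {
                            "file_path": file_path,
                            "log_group_name": log_group,
                            # Kept as a literal placeholder; the agent substitutes it.
                            "log_stream_name": "{instance_id}",
                            "retention_in_days": retention_days,
                        }
                    ]
                }
            }
        }
    }


def store_config(name, config, region):
    """Save the config as a String parameter in Parameter Store."""
    import boto3  # third-party; imported lazily so the builder works without it

    ssm = boto3.client("ssm", region_name=region)
    ssm.put_parameter(
        Name=name,
        Value=json.dumps(config, indent=2),
        Type="String",
        Overwrite=True,  # replace an existing parameter with the same name
    )


# Example call (hypothetical region, needs AWS credentials):
# config = build_agent_config("/var/log/audit/audit.log", "audit-event-logs", 3)
# store_config("cloudwatch-audit-log-config", config, "us-east-1")
```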

Next, go to SSM Run Command and find the “AmazonCloudWatch-ManageAgent” document. Make sure you change the action to “configure (append)”. This will not override any existing CloudWatch agent configuration, in case you forgot there was one. In “Optional Configuration Location”, paste in the parameter name.

Choose the instances you want to target and run the command.

You should then see the success message.
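For reference, here is roughly what the same configure step looks like through boto3. Treat it as a sketch under assumptions: the parameter keys (`action`, `mode`, `optionalConfigurationSource`, `optionalConfigurationLocation`, `optionalRestart`) are how I understand the document's inputs, so check them against the document's Parameters tab before relying on this. The instance ID and region are hypothetical.

```python
def build_configure_request(instance_ids, parameter_name):
    """Build send_command arguments that append a config from Parameter Store."""
    return {
        "DocumentName": "AmazonCloudWatch-ManageAgent",
        "InstanceIds": list(instance_ids),
        "Parameters": {
            # Append keeps whatever configuration the agent already has.
            "action": ["configure (append)"],
            "mode": ["ec2"],
            # Read the config from SSM Parameter Store.
            "optionalConfigurationSource": ["ssm"],
            "optionalConfigurationLocation": [parameter_name],
            # Restart the agent so the new config takes effect.
            "optionalRestart": ["yes"],
        },
    }


def send_configure(request, region):
    """Send the request through SSM Run Command and return the command ID."""
    import boto3  # third-party; imported lazily so the builder works without it

    ssm = boto3.client("ssm", region_name=region)
    return ssm.send_command(**request)["Command"]["CommandId"]


# Example call (hypothetical instance ID and region, needs AWS credentials):
# request = build_configure_request(["i-0123456789abcdef0"], "cloudwatch-audit-log-config")
# command_id = send_configure(request, "us-east-1")
```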

Finally, let’s do some verification.

Go into one of the instances and execute this command:

    cat /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.toml

You should see something like this:

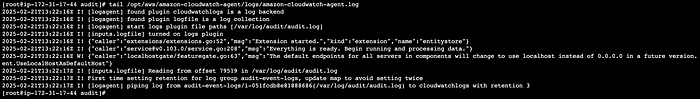

So the configuration did get pushed down. You can also see that the logs are being piped by checking the agent’s own log:

    tail /opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log
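Logging into each box does not scale to hundreds of instances, so the same kind of check can be pushed through Run Command as well. The sketch below assumes boto3, the stock `AWS-RunShellScript` document, and that the agent's `status` action prints a small JSON document with a `status` field (which is what I have seen, but verify it on your agent version). The helper names, tag and region are hypothetical.

```python
import json

# Path of the agent control script on Linux installs.
STATUS_COMMAND = (
    "/opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl "
    "-m ec2 -a status"
)


def build_status_request(tag_key, tag_value):
    """Build send_command arguments that ask each tagged instance for agent status."""
    return {
        "DocumentName": "AWS-RunShellScript",
        "Targets": [{"Key": f"tag:{tag_key}", "Values": [tag_value]}],
        "Parameters": {"commands": [STATUS_COMMAND]},
    }


def parse_status(stdout):
    """Extract the 'status' field from the JSON printed by the status action."""
    return json.loads(stdout).get("status")


def fetch_status(command_id, instance_id, region):
    """Read one instance's command output and return its reported agent status."""
    import boto3  # third-party; imported lazily so the helpers work without it

    ssm = boto3.client("ssm", region_name=region)
    # This call can fail if made before the invocation has been registered.
    result = ssm.get_command_invocation(CommandId=command_id, InstanceId=instance_id)
    return parse_status(result["StandardOutputContent"])
```

Anything that does not come back as running is an instance worth looking at by hand.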

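Side note: the instance IAM role needs the basic CloudWatch Logs permissions, such as the ones below. I have included logs:PutRetentionPolicy because the config above sets retention_in_days, and the agent needs that permission to apply it.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents",
            "logs:DescribeLogStreams",
            "logs:PutRetentionPolicy"
          ],
          "Resource": "*"
        }
      ]
    }

And of course, you can also view the logs in the CloudWatch Logs console itself.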
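Since the config names each log stream after its instance ID, you can also check from code which instances have not shipped anything yet. A minimal sketch, assuming boto3 and `logs:DescribeLogStreams` on the caller; the function names, instance IDs and region are hypothetical.

```python
def stale_instances(expected_instance_ids, log_streams):
    """Return instance IDs that have no stream with ingested events."""
    active = {
        stream["logStreamName"]
        for stream in log_streams
        # lastIngestionTime is only present once events have been ingested.
        if "lastIngestionTime" in stream
    }
    return sorted(set(expected_instance_ids) - active)


def list_streams(log_group, region):
    """Fetch every log stream in the log group, following pagination."""
    import boto3  # third-party; imported lazily so the helper above works without it

    logs = boto3.client("logs", region_name=region)
    paginator = logs.get_paginator("describe_log_streams")
    streams = []
    for page in paginator.paginate(logGroupName=log_group):
        streams.extend(page["logStreams"])
    return streams


# Example call (hypothetical instance IDs and region, needs AWS credentials):
# missing = stale_instances(["i-0aaa", "i-0bbb"], list_streams("audit-event-logs", "us-east-1"))
```

This is the kind of fleet-wide check that is hard to get with plain rsyslog.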

In conclusion, this is how you can pipe any logs to CloudWatch via the agent. Just a small tip: if your log throughput is large, smaller instances like t3.micro or t3.small may not be able to handle the volume on initial setup. From my experience, you can start with a t3.medium, monitor the CPU and RAM usage, then scale up or down after a day or two.

Debugging FAQ

My instances are in private subnets in my VPC, and they can’t reach the public logs.<region>.amazonaws.com URL.

Sadly, you will have to deal with (pay) Amazon for their private endpoint.

For example, my instance here is in a private subnet

Without the logs endpoint, when I execute “dig logs.<region>.amazonaws.com”, I get the public IPs for the endpoint.

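But once I create an interface endpoint for CloudWatch Logs under VPC endpoints and dig again, I get private IPs from my VPC instead (this assumes private DNS is enabled on the endpoint).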
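The endpoint and the DNS check can be scripted too. This is a sketch under assumptions: boto3 is installed, the caller may call `ec2:CreateVpcEndpoint`, and the VPC has DNS support and DNS hostnames enabled so that private DNS works. The function names and every ID in the example are hypothetical; the resolver helper is a simple stand-in for `dig`, not a replacement for it.

```python
import ipaddress
import socket


def resolve_ips(hostname):
    """Resolve a hostname to a sorted list of its IP addresses."""
    infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def all_private(ips):
    """True if there is at least one address and every address is private."""
    return bool(ips) and all(ipaddress.ip_address(ip).is_private for ip in ips)


def create_logs_endpoint(vpc_id, subnet_ids, security_group_ids, region):
    """Create an interface endpoint for CloudWatch Logs with private DNS enabled."""
    import boto3  # third-party; imported lazily so the helpers work without it

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.create_vpc_endpoint(
        VpcEndpointType="Interface",
        VpcId=vpc_id,
        ServiceName=f"com.amazonaws.{region}.logs",
        SubnetIds=subnet_ids,
        # The security group must allow HTTPS (443) from your instances.
        SecurityGroupIds=security_group_ids,
        PrivateDnsEnabled=True,
    )
    return response["VpcEndpoint"]["VpcEndpointId"]


# Example check, run from an instance inside the VPC (hypothetical region):
# print(all_private(resolve_ips("logs.us-east-1.amazonaws.com")))
```

If the check prints False from inside the private subnet, the instance is still resolving the public endpoint.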